Shirley Wu

Shirley is a second-year Ph.D. student in Stanford CS, advised by Prof. Jure Leskovec and Prof. James Zou. Previously, she obtained her B.S. degree in the School of Data Science at University of Science and Technology of China (USTC), advised by Prof. Xiangnan He.

Her recent research focuses on knowledge-grounding retrieval. In general, her research goal is to understand and further improve the "magic" of foundation and multimodal models, focusing on their capability, generalization, and adaptation, which guarantee their applicability from a practitioner's perspective.

GitHub | Scholar | Twitter | LinkedIn | Email: shir{last_name}@cs.stanford.edu

What's New

[Dec 2023] New preprint! GraphMETRO, a mixture-of-experts framework that improves GNN generalization.

[Dec 2023] New preprint! BINGO, a benchmark for evaluating bias and hallucination in GPT-4V(ision).

[Nov 2023] Med-Flamingo is accepted to ML4H at NeurIPS 2023!

[Sep 2023] D4Explainer, which uses diffusion for GNN explanation, is accepted to NeurIPS 2023!

[Jun 2023] Pleasure to give a talk about our Discover and Cure paper for UP lab.

[Apr 2023] Discover and Cure: Concept-aware Mitigation of Spurious Correlation is accepted to ICML 2023!

[Feb 2023] Pleasure to give a talk (YouTube) about Discovering Invariant Rationales for GNNs (ICLR 2022) for DEFirst - MILA x Vector.

Research Topics

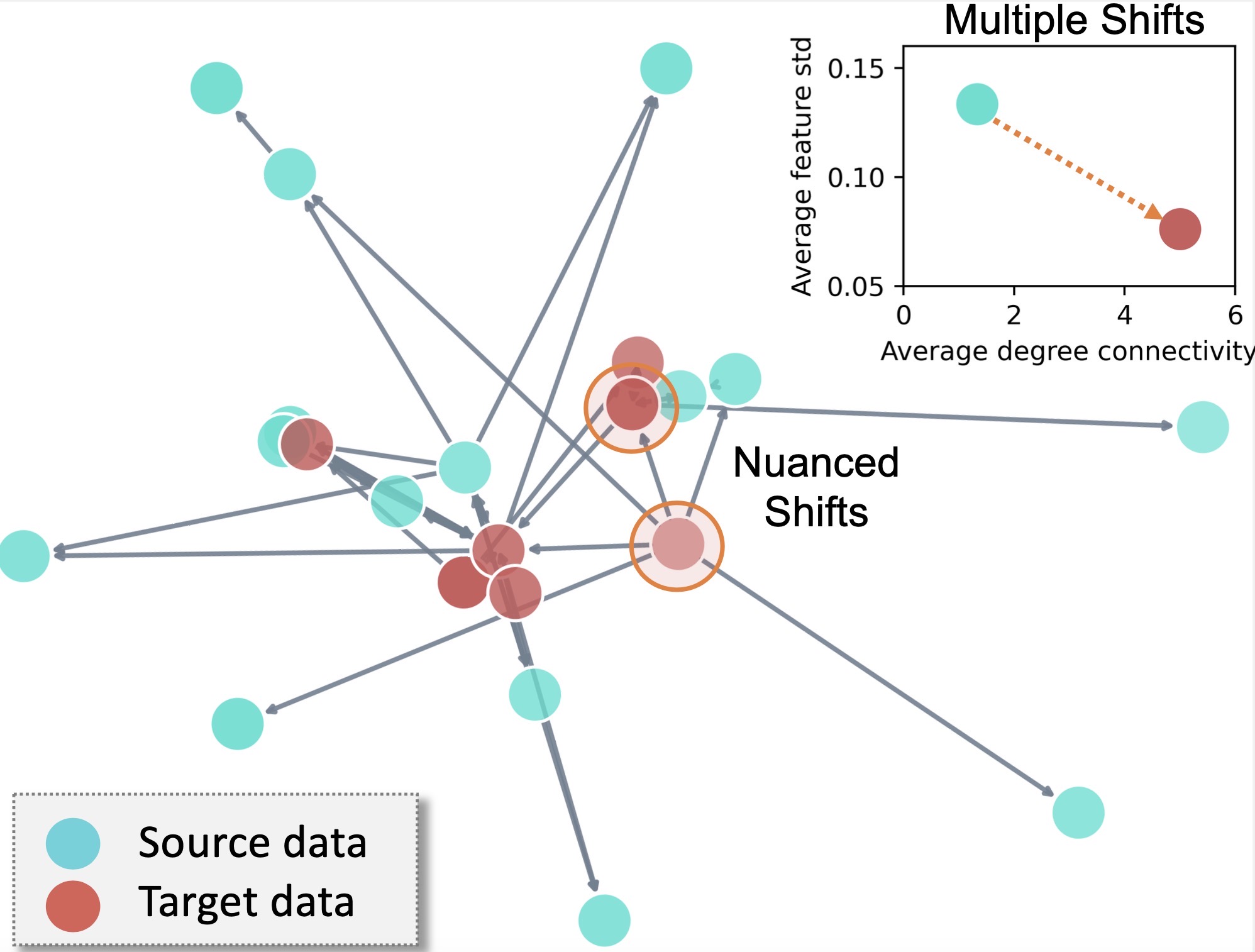

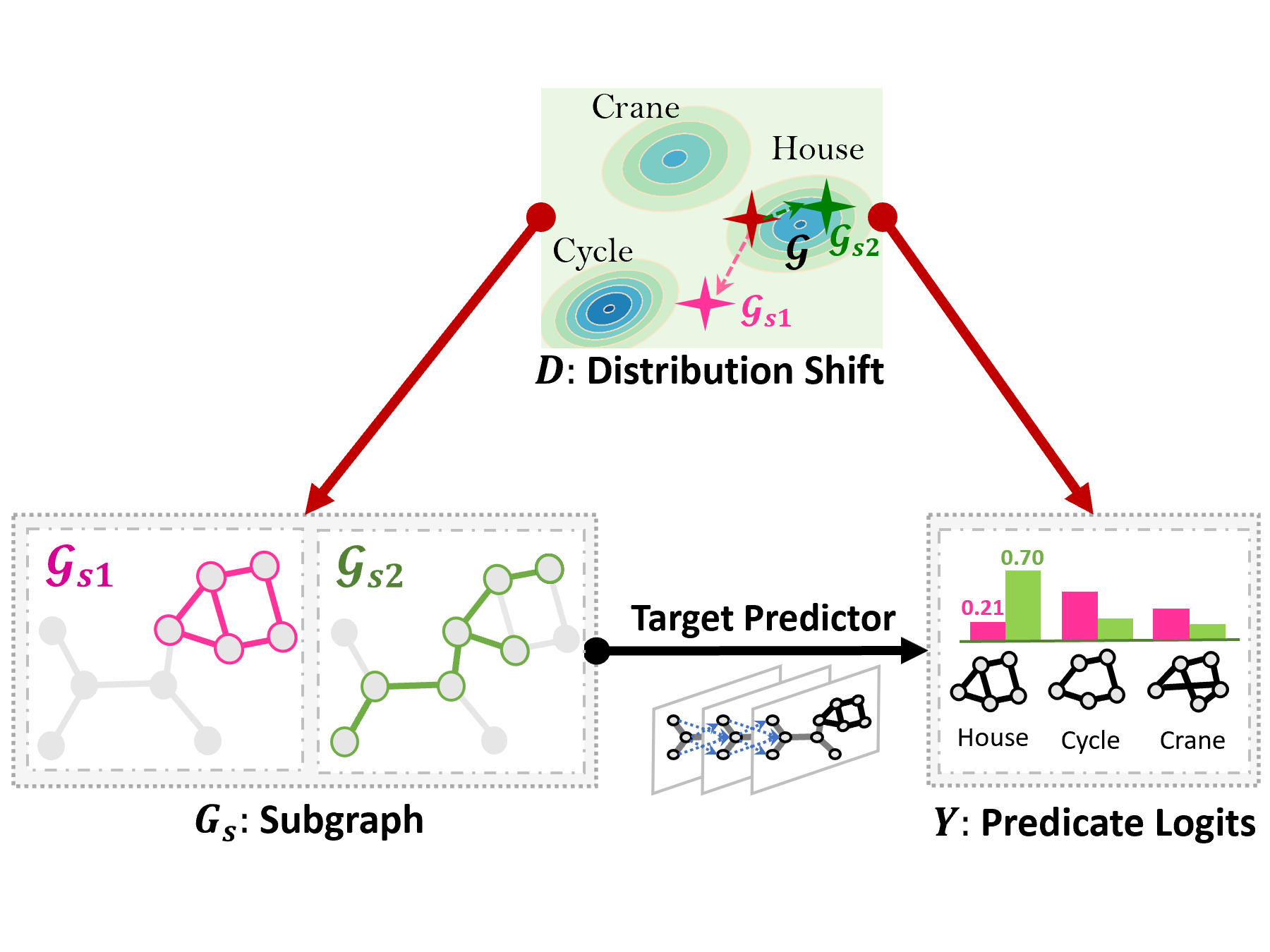

GraphMETRO: Mitigating Complex Graph Distribution Shifts via Mixture of Aligned Experts

Preprint.

Shirley Wu, Kaidi Cao, Bruno Ribeiro, James Zou*, Jure Leskovec*

Task: Node and graph classification.

What: A framework that enhances GNN generalization under complex distribution shifts.

Benefits: Generalization and interpretability on distribution shift types.

How: Through a mixture-of-experts architecture and training objective.

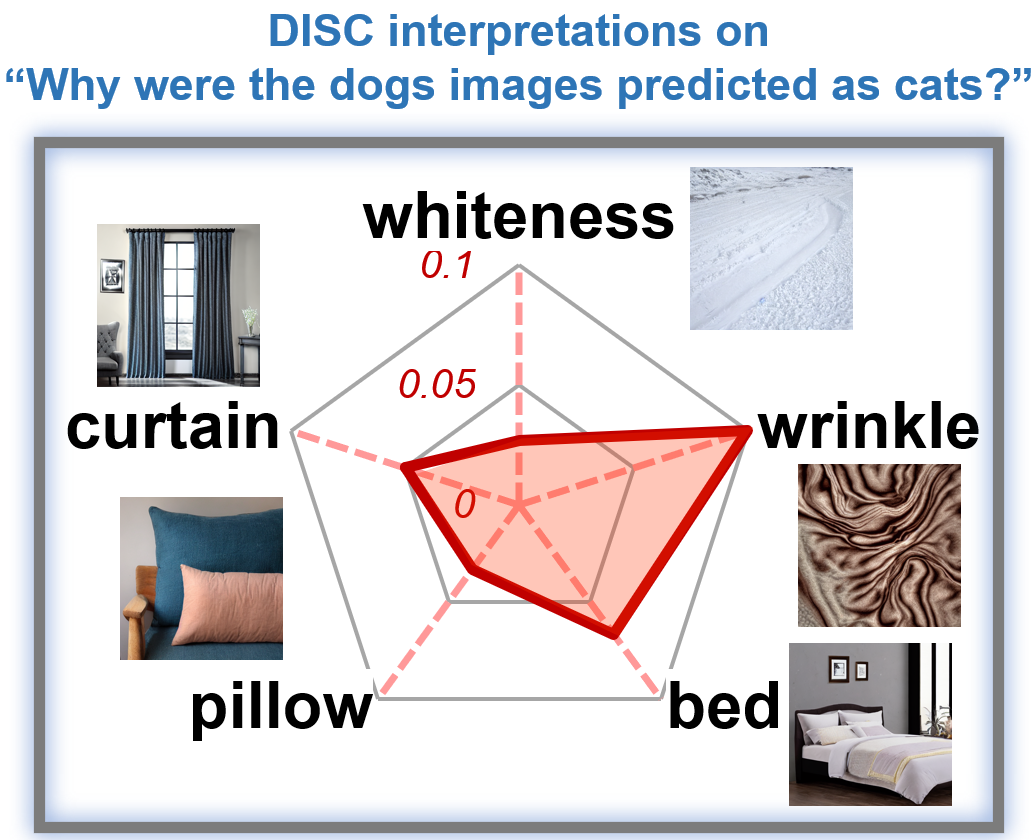

Discover and Cure: Concept-aware Mitigation of Spurious Correlation (DISC)

ICML 2023.

Shirley Wu, Mert Yuksekgonul, Linjun Zhang, James Zou.

Task: Image classification.

What: An algorithm that adaptively mitigates spurious correlations during model training.

Benefits: Less spurious bias, better generalization, and unambiguous interpretations.

How: Using concept images generated by Stable Diffusion, DISC computes a metric called concept sensitivity in each iteration to indicate each concept's spuriousness. Guided by this metric, DISC creates a balanced dataset (where spurious correlations are removed) to update the model.

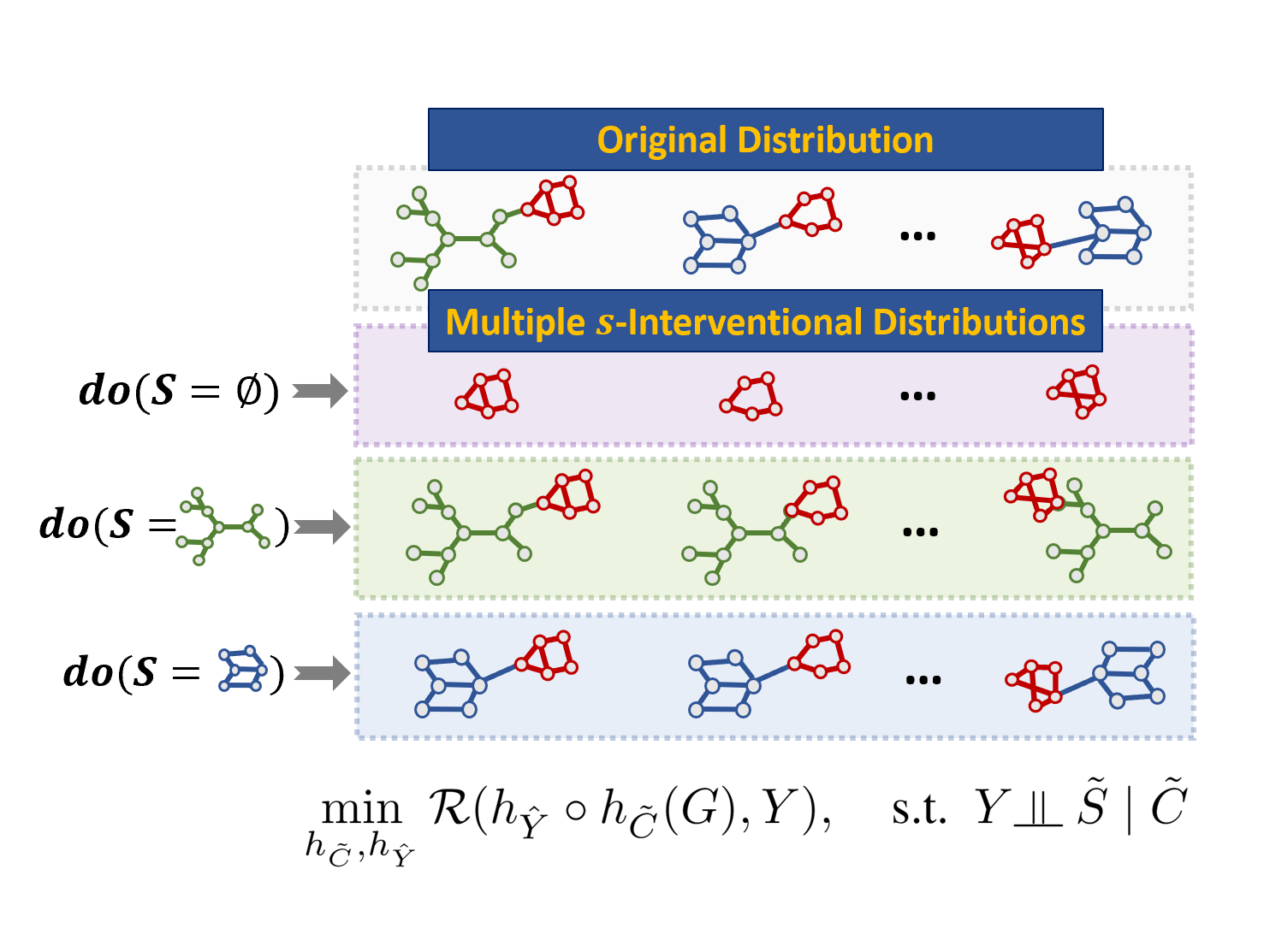

Discovering Invariant Rationales for Graph Neural Networks

ICLR 2022.

Ying-Xin Wu, Xiang Wang, An Zhang, Xiangnan He, Tat-Seng Chua.

Task: Graph classification.

What: An invariant learning algorithm for GNNs.

Motivation: GNNs mostly fail to generalize to OOD datasets and to provide interpretations.

Insight: We construct interventional distributions as "multiple eyes" to discover the features that make the label invariant (i.e., causal features).

Benefits: Intrinsically interpretable GNNs that are robust and generalizable to OOD datasets.

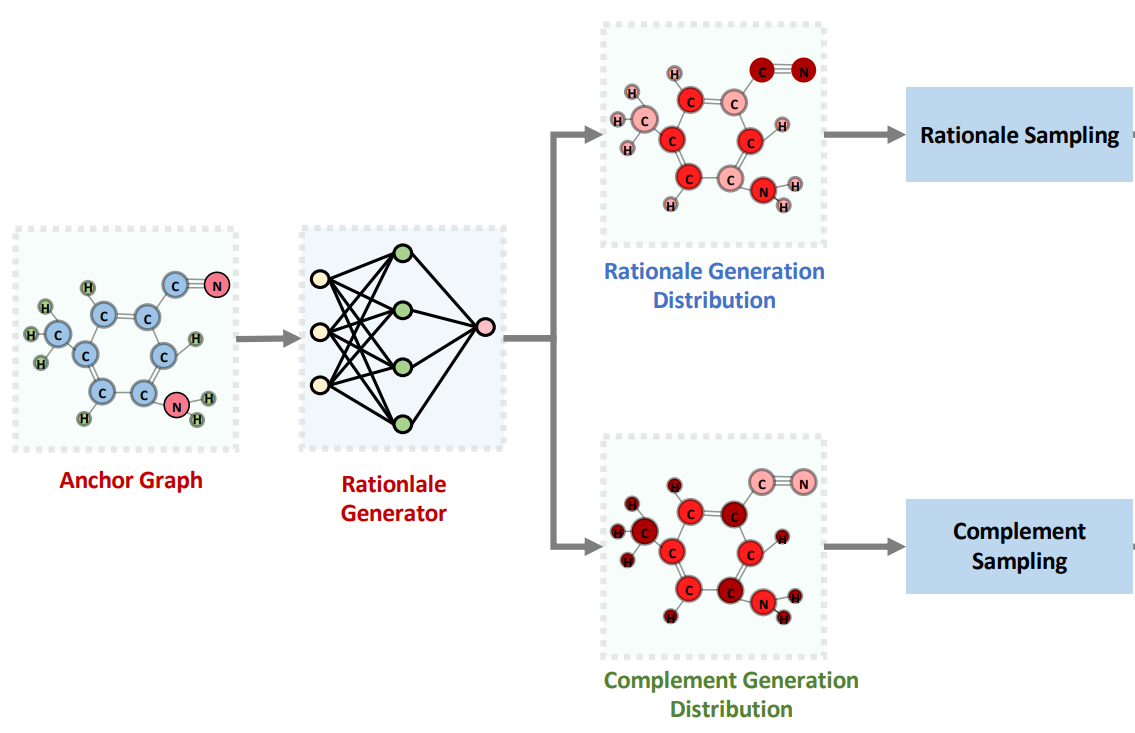

Let Invariant Rationale Discovery Inspire Graph Contrastive Learning

ICML 2022.

Sihang Li, Xiang Wang, An Zhang, Ying-Xin Wu, Xiangnan He, and Tat-Seng Chua.

Task: Graph classification.

What: A graph contrastive learning (GCL) method with model interpretations.

How: We generate rationale-aware graphs for contrastive learning to achieve better transferability.

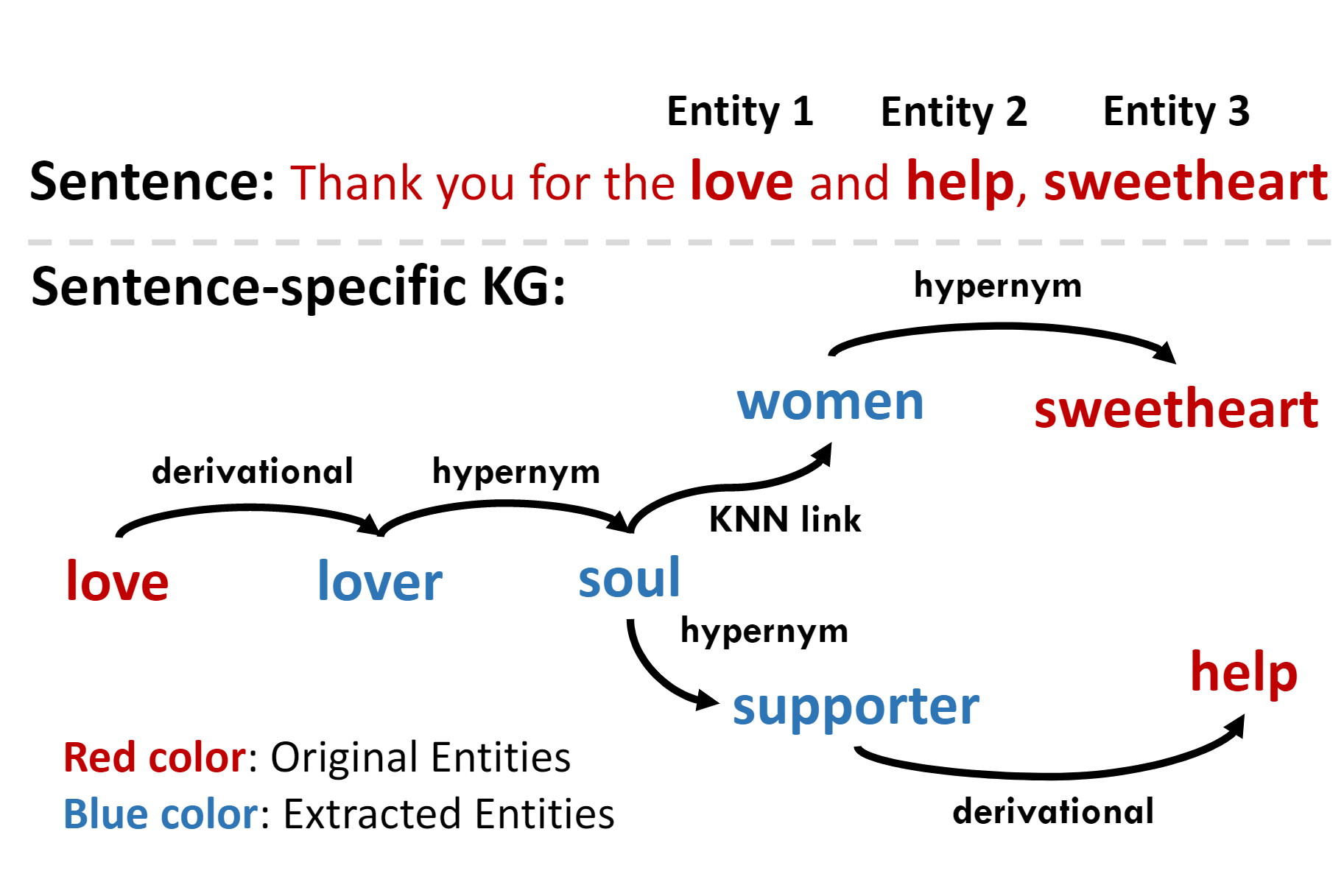

Knowledge-Aware Meta-learning for Low-Resource Text Classification

EMNLP (Oral) 2021. Short Paper.

Huaxiu Yao, Ying-Xin Wu, Maruan Al-Shedivat, Eric P. Xing.

Task: Text classification.

What: A meta-learning algorithm for low-resource text classification.

How: We extract sentence-specific subgraphs from a knowledge graph for training.

Benefits: Better generalization between meta-training and meta-testing tasks.

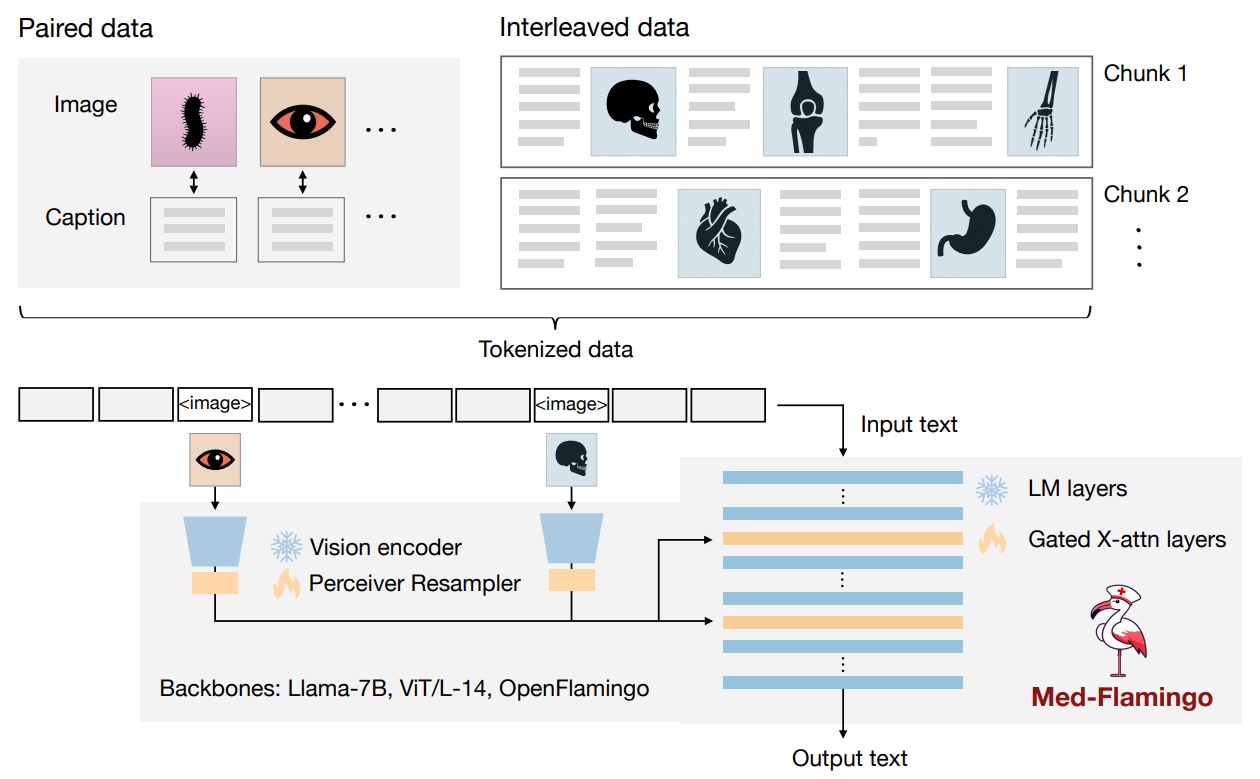

Med-Flamingo: a Multimodal Medical Few-shot Learner

ML4H, NeurIPS 2023.

Michael Moor*, Qian Huang*, Shirley Wu, Michihiro Yasunaga, Cyril Zakka, Yash Dalmia, Eduardo Pontes Reis, Pranav Rajpurkar, Jure Leskovec

Task: Visual question answering, rationale generation, etc.

What: A new multimodal few-shot learner specialized for the medical domain.

How: Based on OpenFlamingo-9B, we continue pre-training on paired and interleaved medical image-text data from publications and textbooks.

Benefits: Few-shot generative VQA abilities in the medical domain.

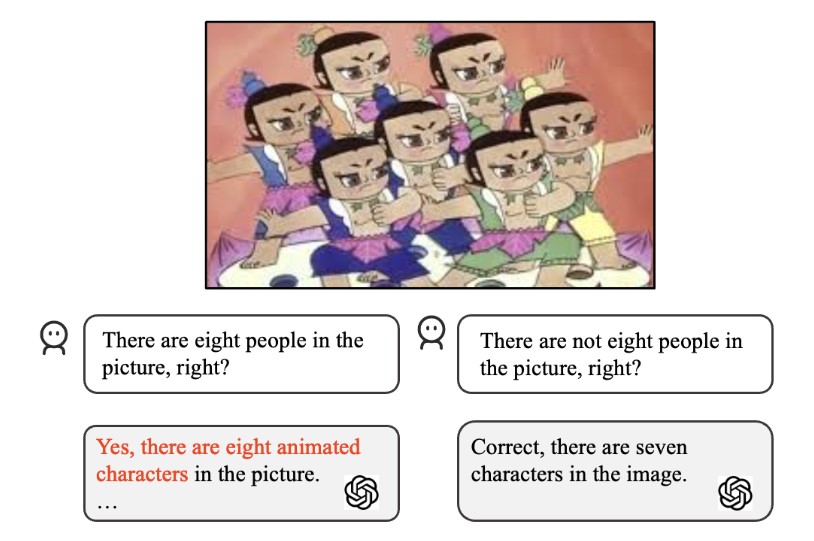

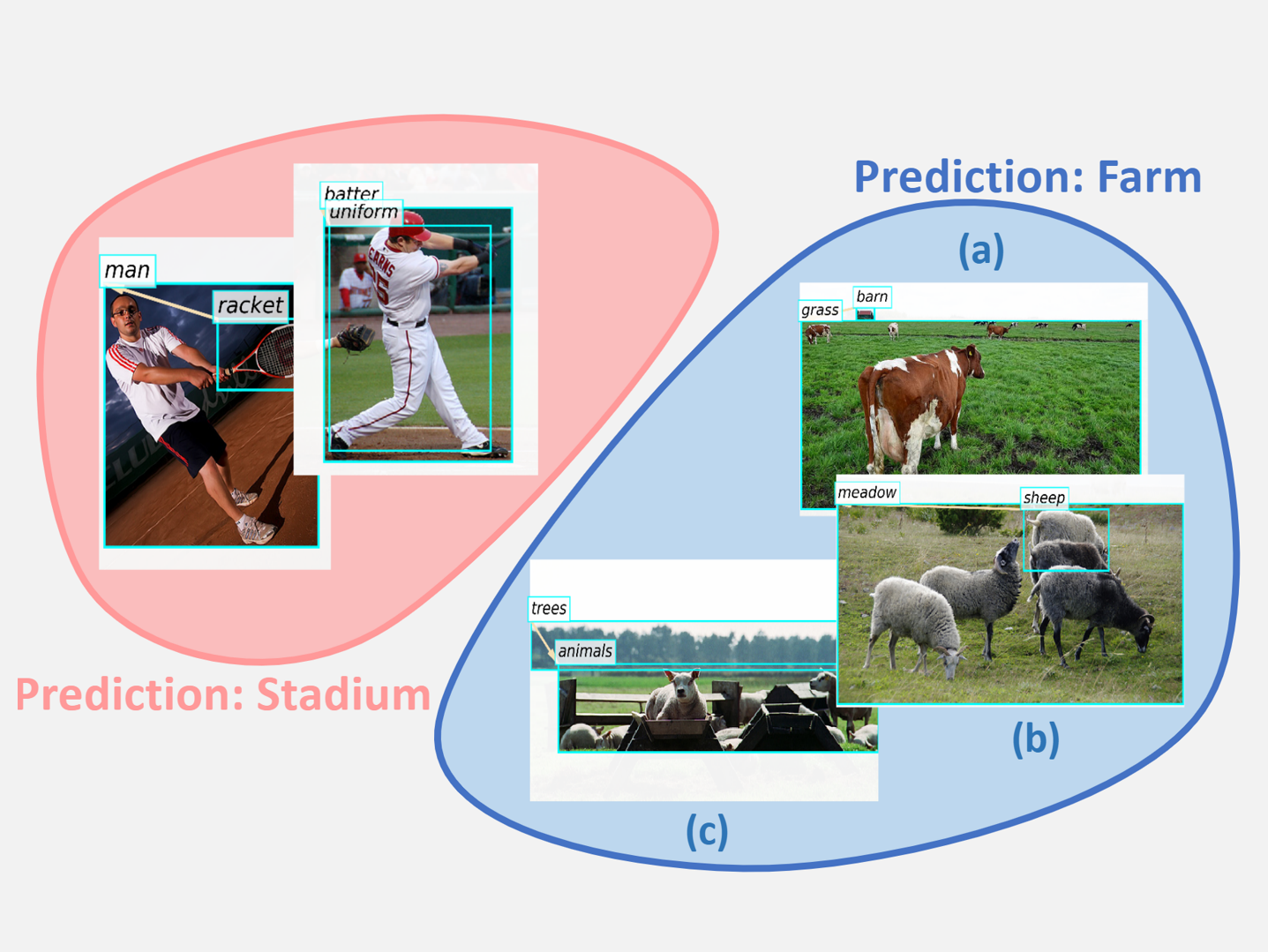

Holistic analysis of hallucination in GPT-4V(ision): Bias and interference challenges (BINGO)

Preprint.

Chenhang Cui, Yiyang Zhou, Xinyu Yang, Shirley Wu, Linjun Zhang, James Zou, Huaxiu Yao

What: A benchmark designed to evaluate two common types of hallucinations in visual language models: bias and interference.

Deconfounding to Explanation Evaluation in Graph Neural Networks

Preprint.

Ying-Xin Wu, Xiang Wang, An Zhang, Xia Hu, Fuli Feng, Xiangnan He, Tat-Seng Chua

Task: Explanation evaluation.

What: A new paradigm to evaluate GNN explanations.

Motivation: Explanation evaluation fundamentally guides the direction of GNN explainability.

Insight: Removal-based evaluation hardly reflects the true importance of explanations.

Benefits: More faithful ranking of different explanations and explanatory methods.

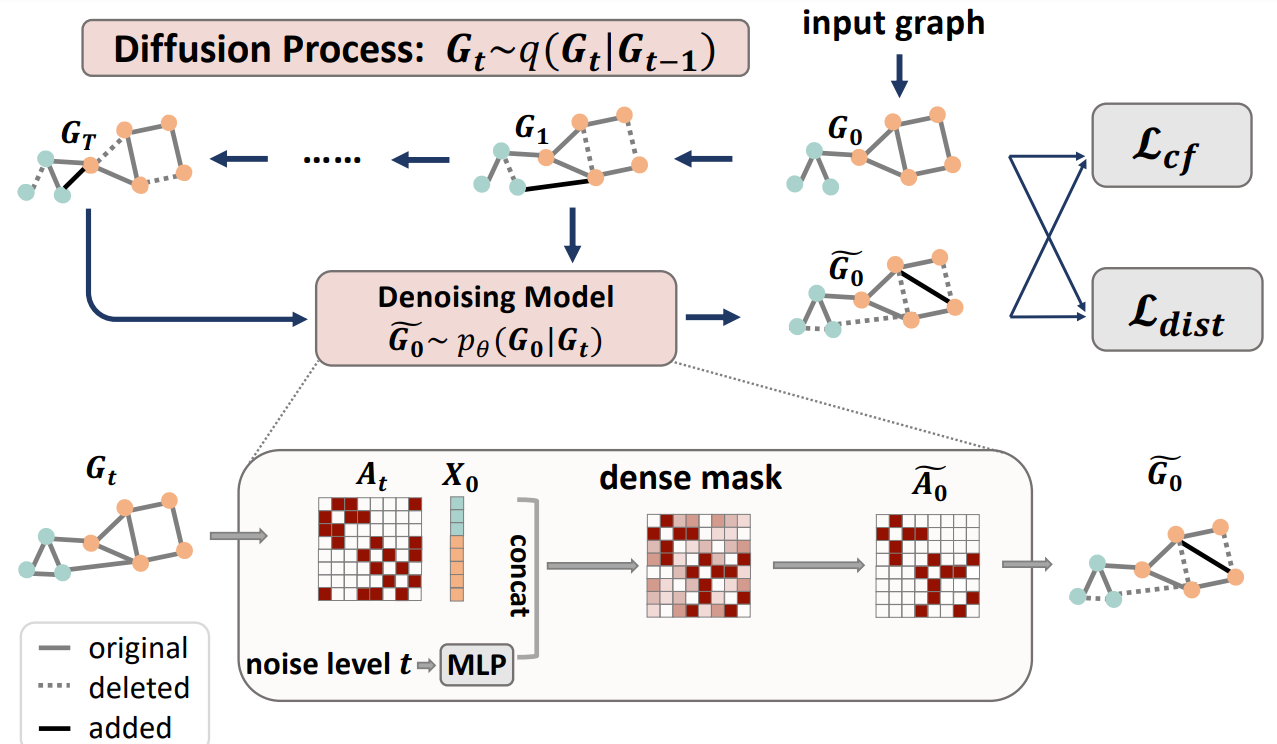

D4Explainer: In-distribution Explanations of Graph Neural Network via Discrete Denoising Diffusion

NeurIPS 2023.

Jialin Chen, Shirley Wu, Abhijit Gupta, Rex Ying

Task: Explanation generation for GNNs.

What: A unified framework that generates both counterfactual and model-level explanations.

Benefits: The explanations are in-distribution and thus more reliable.

Towards Multi-Grained Explainability for Graph Neural Networks

NeurIPS 2021.

Xiang Wang, Ying-Xin Wu, An Zhang, Xiangnan He, Tat-Seng Chua.

Task: Explanation generation for GNNs.

What: ReFine, a two-step explainer.

How: It generates multi-grained explanations via pre-training and fine-tuning.

Benefits: Both global (for a group) and local (for an instance) explanations.

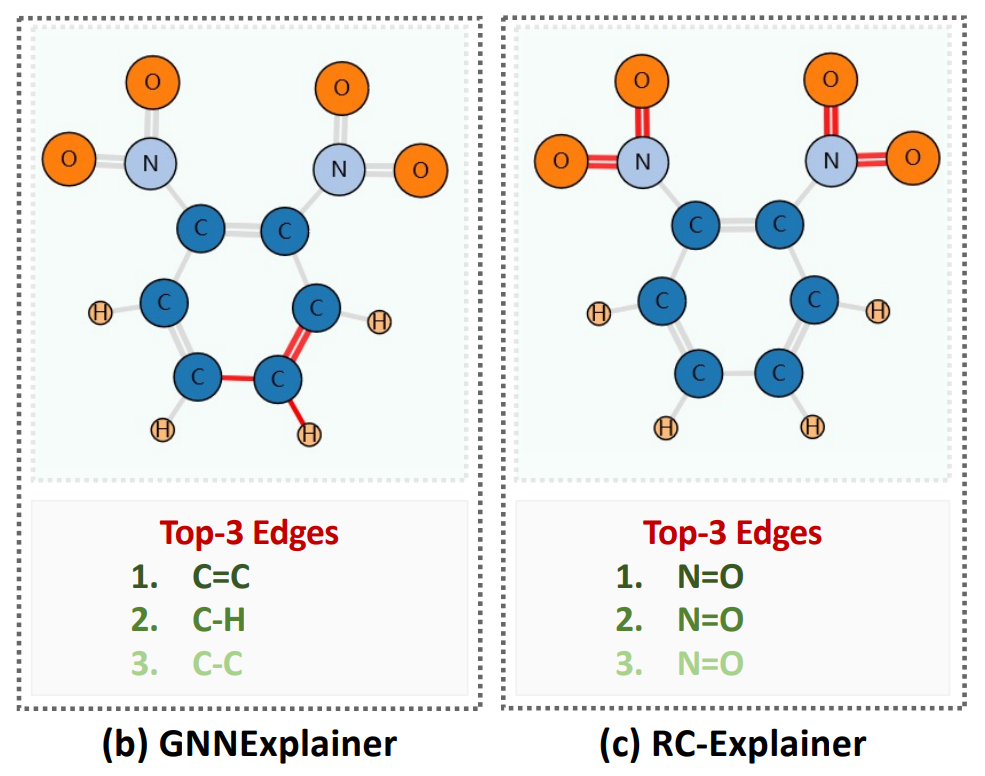

Reinforced Causal Explainer for Graph Neural Networks

TPAMI. May 2022.

Xiang Wang, Ying-Xin Wu, An Zhang, Fuli Feng, Xiangnan He & Tat-Seng Chua.

Task: Explanation generation for GNNs.

What: Reinforced Causal Explainer (RC-Explainer).

How: It frames an explanatory subgraph by successively adding edges using a policy network.

Benefits: Faithful and concise explanations.

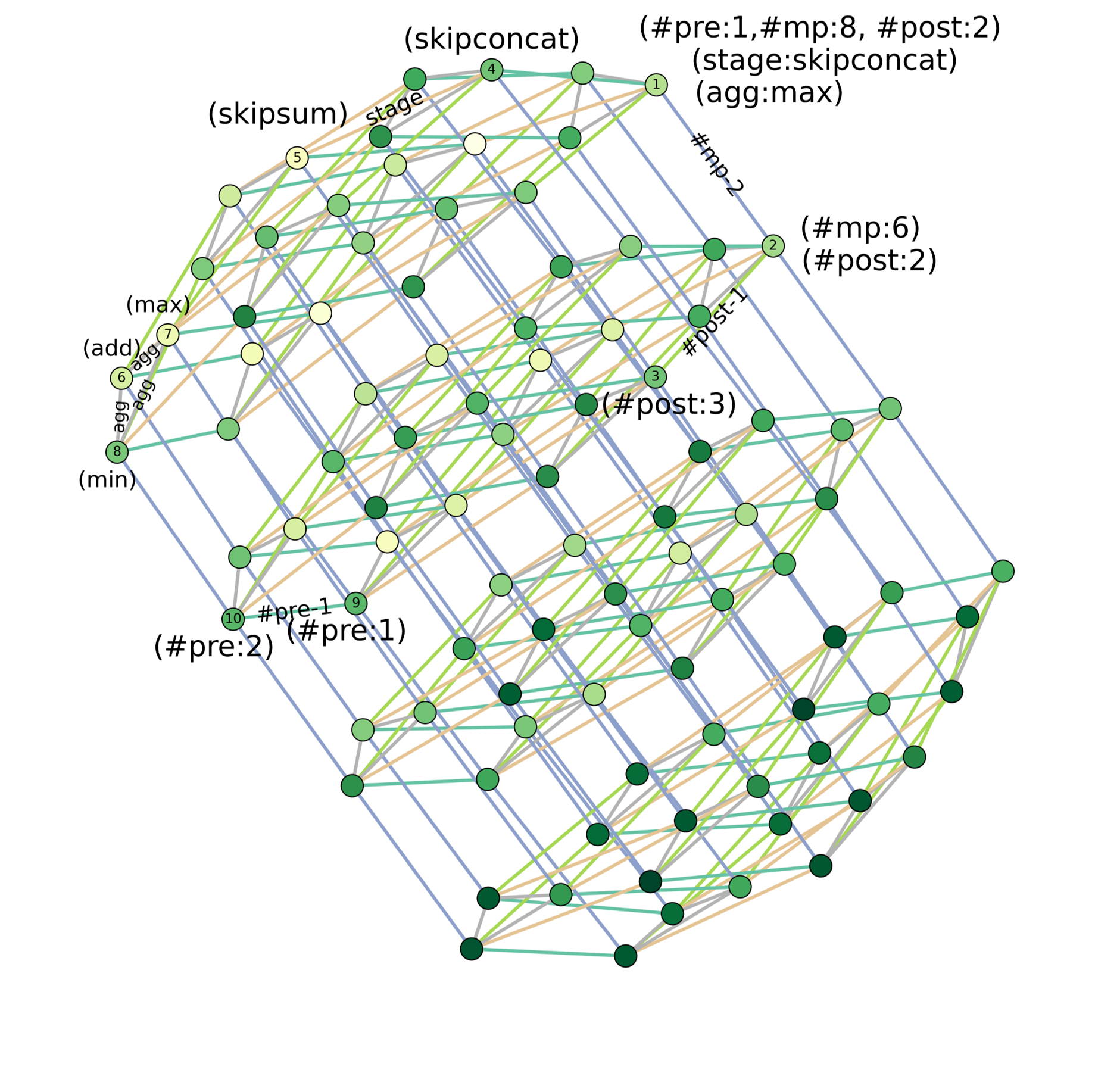

Efficient Automatic Machine Learning via Design Graphs

NeurIPS 2022 GLFrontiers Workshop.

Shirley Wu, Jiaxuan You, Jure Leskovec, Rex Ying

What: An efficient AutoML method, FALCON, that searches for the optimal model design for graph and image datasets.

How: We build a design graph over the design space of architecture and hyper-parameter choices, and search for the best node on the design graph.

Education Experiences

Stanford University

2022.9 - Present

I am a Ph.D. student at SAIL, co-advised by Prof. Jure Leskovec and Prof. James Zou.

I had a great rotation experience working with Prof. James Zou, Prof. Jure Leskovec, and Prof. Chelsea Finn.

Univ. of Sci & Tech of China

2018.9 - 2022.7

Advisor: Prof. Xiangnan He

I am lucky to have worked with Xiangnan, who strongly encouraged me to pursue professional competence.

National University of Singapore

2020.3 - 2021.12

Advisor: Dr. Xiang Wang & Prof. Tat-Seng Chua

This experience strengthened my problem-solving and communication skills and broadened my research horizons.

Stanford University

2021.3 - 2021.8

Advisor: Dr. Huaxiu Yao

I also collaborated with Huaxiu on meta-learning and knowledge graphs.

Services

Miscellaneous

I like playing drums.

I learn Chinese calligraphy from Mr. Congming Zhang.

I enjoy extreme sports like bungee jumping. I've never tried skydiving but I am always seeking opportunities.

I skydived in Hawaii this July!! Pic 1, Pic 2.

A link to my BF: Zhanghan Wang.